About GEMS

About GEMS AI?

The programme

The lack of interpretability of machine learning models first prevents anyone from questioning why a decision was taken or what the real causes behind it are.

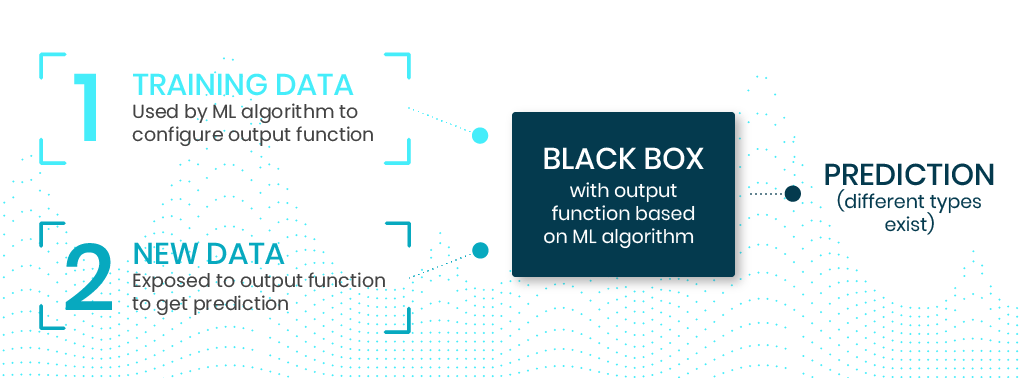

Machine learning algorithms build predictive models which are nowadays used for a large variety of tasks. Over the last decades, the complexity of such algorithms has grown, going from simple and interpretable prediction models based on regression rules to very complex models such as random forests, gradient boosting, and models using deep neural networks.

Such models are designed to maximize the accuracy of their predictions at the expense of the interpretability of the decision rule. Little is known about how the information is processed to obtain a prediction, which explains why such models are widely considered black boxes.

Moreover, this lack of interpretability gives rise to a serious ethical issue: the fairness of the decision. When a decision from an algorithm designed using Artificial Intelligence is applied to the whole population, the algorithm is meant to mimic the behaviour conveyed by the learning sample.

Yet, in many cases, learning samples may present biases, either due to the presence of a real but unwanted bias in the observations (societal bias for instance) or due to the way the data are processed (multiple sensors, parallelized inference, evolution in the distribution or unbalanced sample…). Hence Fairness in Machine Learning is deeply related to the explainability of models.

The Gems-AI toolbox implements the ideas described in the companion paper:

Entropic Variable Projection for Explainability and Intepretability

Hence the goal of this research program is twofold:

1/ first, to provide a tool to analyze why an automated algorithm has made a given choice. We thus provide a framework for both Explainability and Understandability of Artificial Intelligence;

2/ then, to analyze the decision rules and detect the effect, or causal influence, of each variable on the decisions, and hence to detect possible biases or, worse, discrimination.

Fairness & Robustness in Machine Learning

The whole machinery of Machine Learning relies on the fact that a decision rule can be learnt from a set of labeled examples called the learning sample. This decision rule is then applied to the whole population, which is assumed to follow the same underlying distribution. In many cases, learning samples may present biases, either due to the presence of a real but unwanted bias in the observations (societal bias for instance) or due to the way the data are processed (multiple sensors, parallelized inference, evolution in the distribution or unbalanced sample…). Hence the goal of this research is twofold: first, to detect, analyze and remove such biases, which is called fair learning; then, to understand how the biases are created and to provide more robust, certifiable and explainable methods to tackle distributional effects in machine learning, including transfer learning, consensus learning, theoretical bounds and robustness.

Applications concern societal issues of Artificial Intelligence as well as industrial use cases.

Keywords: Machine Learning, Optimal Transport, Wasserstein Barycenter, Transfer Learning, Adversarial Learning, Robustness

Research Program for Fairness

Organization of CIMI Fairness Seminar for AOC members (2017-2018)

Theoretical properties of fair learning

* Using Optimal Transport (OT) theory

– E. del Barrio (U. Valladolid), P. Gordaliza (U. Valladolid-Phd), F. Gamboa

– OT theory is a strong theme at the Mathematical Institute of Valladolid

– Ensuring Fairness with OT for classification

– Fairness in regression (work in progress)
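To make the optimal transport viewpoint above concrete, here is a minimal sketch, under our own assumptions, of how the gap between the score distributions of two groups could be measured with the 1-D Wasserstein distance. The function name `wasserstein_1d` and the toy scores are hypothetical illustrations and are not taken from the papers listed here.

```python
from bisect import bisect_right


def wasserstein_1d(xs, ys):
    """1-Wasserstein distance between two empirical 1-D distributions.

    In one dimension the distance equals the integral of |F - G|,
    where F and G are the empirical cumulative distribution functions.
    """
    xs, ys = sorted(xs), sorted(ys)
    grid = sorted(xs + ys)  # every point where F or G can jump
    total = 0.0
    for left, right in zip(grid, grid[1:]):
        f = bisect_right(xs, left) / len(xs)  # F on [left, right)
        g = bisect_right(ys, left) / len(ys)  # G on [left, right)
        total += abs(f - g) * (right - left)
    return total


# Hypothetical scores produced by a classifier for two groups.
scores_group_a = [0.2, 0.4, 0.5, 0.7]
scores_group_b = [0.3, 0.6, 0.8, 0.9]
gap = wasserstein_1d(scores_group_a, scores_group_b)
```

A distance close to zero means that the two groups receive nearly the same distribution of scores; how large a gap is acceptable depends on the application.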

Fairness in clustering and allocation problems

* Fair clustering

– E. del Barrio and H. Inouzhe (U. Valladolid-Phd)

– Fairness in predictive policing: private report for the French Ministry of National Security, with C. Castets-Renard (UT1), P. Besse (INSA), L. Perrusel (UT1)

* Tests and Wasserstein distance with P. Besse, E. del Barrio, P. Gordaliza

– Central Limit Theorem (CLT) for Wasserstein distance

– CLT and application to Fairness

– Confidence Interval for Disparate Impact Estimation

* Error bounds for fair bandit algorithms, work in progress with G. Fortin-Stolz (Orsay)
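The disparate impact mentioned in the list above can be illustrated with a short sketch. We assume the usual definition, the ratio of positive prediction rates between the two groups, and we build the interval with the textbook delta-method approximation for the logarithm of a ratio of two independent proportions. This is not necessarily the interval derived in the work cited here, and the function name `disparate_impact_ci` is hypothetical.

```python
import math


def disparate_impact_ci(y_pred, group, z=1.96):
    """Return (DI, lower, upper) for binary predictions and a binary group label.

    DI = P(y_pred = 1 | group = 0) / P(y_pred = 1 | group = 1).
    The interval is computed on log(DI) with the delta method (assumption:
    the two groups are independent samples), then mapped back with exp.
    """
    pos = {0: 0, 1: 0}  # positive predictions per group
    n = {0: 0, 1: 0}    # sample size per group
    for y, s in zip(y_pred, group):
        n[s] += 1
        pos[s] += y
    if min(pos.values()) == 0:
        raise ValueError("each group needs at least one positive prediction")
    p0, p1 = pos[0] / n[0], pos[1] / n[1]
    di = p0 / p1
    se_log = math.sqrt((1 - p0) / (n[0] * p0) + (1 - p1) / (n[1] * p1))
    return di, di * math.exp(-z * se_log), di * math.exp(z * se_log)


# Toy data: 3 positives out of 10 in group 0, 6 out of 10 in group 1.
y_pred = [1] * 3 + [0] * 7 + [1] * 6 + [0] * 4
group = [0] * 10 + [1] * 10
di, lower, upper = disparate_impact_ci(y_pred, group)
```

With such a small sample the interval is wide, which is precisely why a point estimate of the disparate impact alone can be misleading.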

New feasible algorithms to promote fairness

* With adversarial network

Work in progress with E. Pauwels (IRIT), Serrurier (IRIT)

* With Optimal Transport Cost penalty

Work in progress with L. Risser (CNRS), Couellan (ENAC)

Fairness and Legal Issues

* Paper with C. Castets-Renard (UT1), P. Besse (INSA), Garivier (ENS Lyon)

Explainable AI

* Explainable AI

Paper with F. Bachoc (IMT), F. Gamboa and L. Risser.

We promote global explainability by stressing the variables of a black-box model in order to analyze their particular effect on the decision rule. Toolbox coming soon.
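The idea of stressing a variable can be sketched as follows. We assume here that stressing means reweighting the observations so that the mean of one variable reaches a chosen target while the weights stay as close as possible to uniform in Kullback-Leibler divergence; the solution of that constrained problem is an exponential tilting of the sample. The function names below are hypothetical and do not reflect the Gems-AI toolbox API.

```python
import math


def tilt_weights(values, target_mean, n_iter=200):
    """Weights w_i proportional to exp(lam * x_i) whose weighted mean is target_mean."""
    if not min(values) < target_mean < max(values):
        raise ValueError("target_mean must lie strictly inside the range of values")

    def weights(lam):
        shift = max(lam * v for v in values)  # avoids overflow in exp
        raw = [math.exp(lam * v - shift) for v in values]
        total = sum(raw)
        return [r / total for r in raw]

    def mean(lam):
        return sum(w * v for w, v in zip(weights(lam), values))

    # The weighted mean increases with lam: bracket the root, then bisect.
    low, high = -1.0, 1.0
    while mean(low) > target_mean:
        low *= 2
    while mean(high) < target_mean:
        high *= 2
    for _ in range(n_iter):
        mid = (low + high) / 2
        if mean(mid) < target_mean:
            low = mid
        else:
            high = mid
    return weights((low + high) / 2)


def stressed_mean_prediction(values, predictions, target_mean):
    """Average black-box prediction once the variable's mean is stressed."""
    w = tilt_weights(values, target_mean)
    return sum(wi * p for wi, p in zip(w, predictions))


# Toy example: one variable and the outputs of a hypothetical black-box model.
x = [0.0, 1.0, 2.0, 3.0, 4.0]
preds = [1 / (1 + math.exp(-(v - 2))) for v in x]
baseline = sum(preds) / len(preds)
stressed = stressed_mean_prediction(x, preds, target_mean=3.0)
```

Comparing `stressed` with `baseline` for several target means gives a rough picture of how the model's output reacts to a shift in that variable, without retraining the model or generating new observations.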

Consensus and transfer Learning using OT

Work in progress with H. Inouzhe, E. del Barrio, C. Matrán (U. Valladolid) A. Mayo-Iscar (U. Valladolid)

This research won the CNRS innovation prize (project Ethik-IA).

CHAIR & TEAM

This work is done at the Toulouse Mathematics Institute. It is supported by the Centre National de la Recherche Scientifique (CNRS) and carried out in collaboration with the Artificial and Natural Intelligence Toulouse Institute (ANITI) project.

Jean-Michel LOUBES

Max HALFORD

François BACHOC

Laurent RISSER

Fabrice GAMBOA

PRESS

Le Monde newspaper (02/2019)

Un algorithme peut-il se montrer injuste ? (Can an algorithm be unfair?)

Le Monde newspaper (02/2019)

Les bugs de l’intelligence artificielle (The bugs of artificial intelligence)

Source code on GitHub

You can find on GitHub: the Introduction, the Installation guide, the User guide (Measuring model influence, Evaluating model reliability, Support for image classification), Authors and License.

Contact us

Toulouse

Mathematics Institute

118 Rte de Narbonne

31400 Toulouse - FRANCE

contact@gems-ai.com